First, some context about VR

Security Operations teams face two fundamental challenges when it comes to 'finding bad'.

The first is gaining and maintaining appropriate visibility into what is happening in our environments. Visibility is provided through data (e.g. telemetry, logs). The trinity of data sources for visibility concern accounts/credentials, devices, and network traffic.

The second challenge is getting good recognition within the scope of what is visible. Recognition is fundamentally about what alerting and workflows you can implement and automate in response to activity that is suspicious or malicious.

Visibility and Recognition each have their own different associated issues.

Visibility is a problem about what is and can be generated and either read as telemetry, or logged and stored locally, or shipped to a central platform. The timelines and completeness of what visibility you have can depend on factors such as how much data you can or can't store locally on devices that generate data - and for how long; what your data pipeline and data platform look like (e.g. if you are trying to centralise data for analysis); or the capability of host software agents you have to process certain information locally.

The constraints on visibility sets the bar for factors like coverage, timelines and completeness of what recognition you can achieve. Without visibility, we cannot recognize at all. With limited visibility, what we can recognize may not have much value. With the right visibility, we can still fail to recognise the right things. And with too much recognition, we can quickly overload our senses.

A good example of a technology that offers the opportunity to solve these challenges at the network layer is Darktrace. Their technology provides visibility, from a network traffic perspective, into data that concerns devices and the accounts/credentials associated with them. They then provide recognition on top of this by using Machine Learning (ML) models for anomaly detection. Their models alert on a wide range of activities that may be indicative of threat activity, (e.g. malware execution and command and control, a technical exploit, data exfiltration and so on).

The major advantage they provide, compared to traditional Intrusion Detection Systems (IDS) and other vendors who also use ML for network anomaly detection, is that you can a) adjust the sensitivity of their algorithms and b) build your own recognition for particular patterns of interest. For example, if you want to monitor what connections are made to one or two servers, you can set up alerts for any change to expected patterns. This means you can create and adjust custom recognition based on your enterprise context and tune it easily in response to how context changes over time.

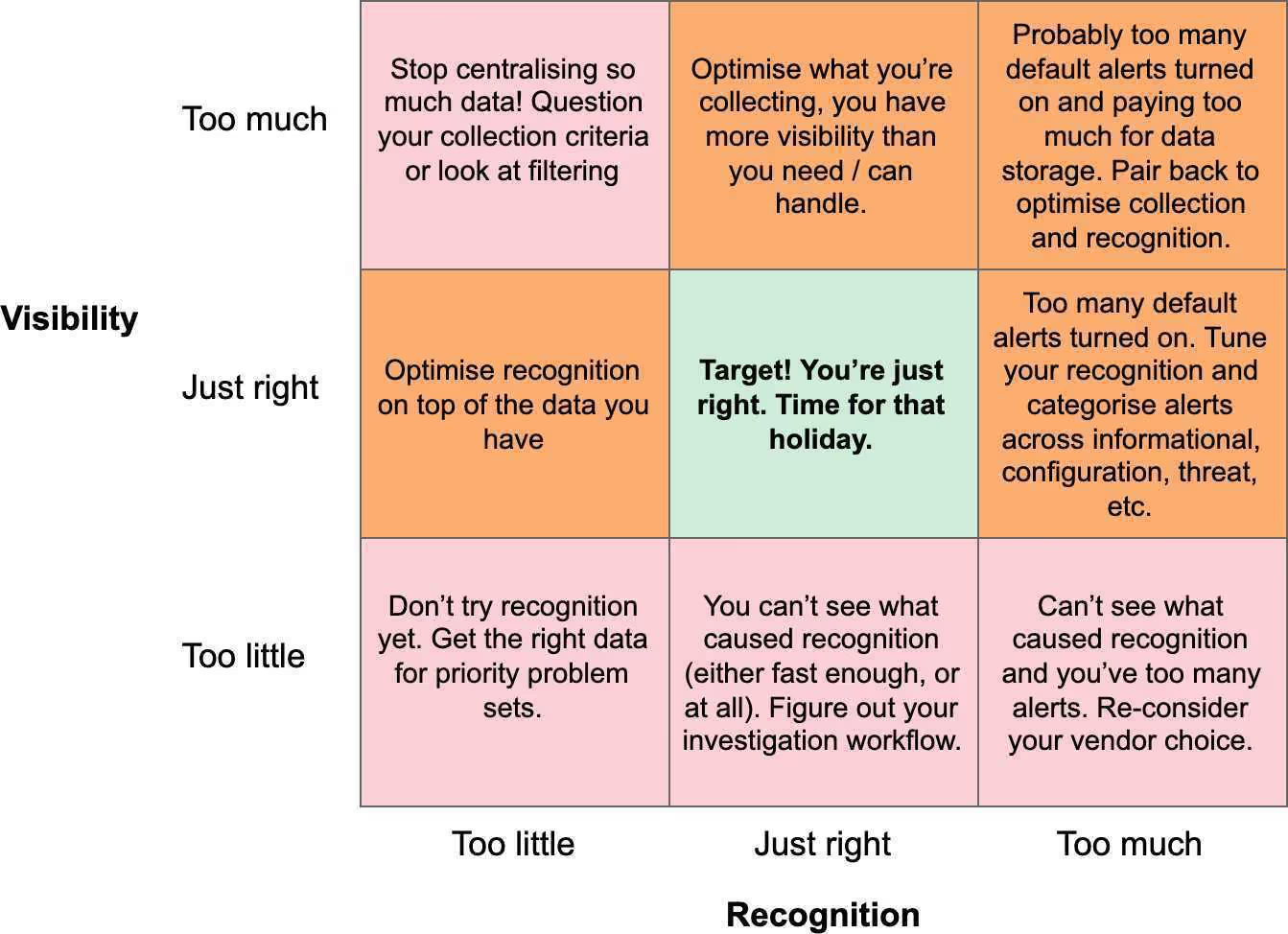

The Goldilocks VR Matrix

Below is what we call the VR Goldilocks Matrix at PBX Group Security. We use it to assess technology, measure our own capability and processes, and ask ourselves hard questions about where we need to focus to get the most value from our budget, (or make cuts / shift investment) if we need to.

In the squares are some examples of what you (maybe) should think about doing if you find yourself there.

Important questions to ask about VR

One of the things about Visibility and Recognition is that it’s not a given they are ‘always on’. Sometimes there are failure modes for visibility (causing a downstream issue with recognition). And sometimes there are failure modes or conditions under which you WANT to pause recognition.

The key questions you must have answers to about this include:

- Under what conditions might I lose visibility?

- How would I know if I have?

- Is that loss a blind spot (i.e. data is lost for a given time period)…

- …or is it 'a temporal delay’ (e.g. a connection fails and data is batched for moving from A to B but that doesn’t happen for a few hours)?

- What are the recognitions that might be impacted by either of the above?

- What is my expectation for the SLA on those recognitions from ‘cause of alert’ to ‘response workflow’?

- Under what conditions would I be willing to pause recognition, change the workflow for what happens upon recognition, or stop it all together?

- What is the stacked ranked list of ‘must, should, could’ for all recognition and why?

Alerts. Alerts everywhere.

More often than not, Security Operations teams suffer the costs of wasted time due to noisy alerts from certain data sources. As a consequence, it's more difficult for them to single out malicious behavior as suspicious or benign. The number of alerts that are generated due to out of the box SIEM platform configurations for sources like Web Proxies and Domain Controllers are often excessive, and the cost to tune those rules can also be unpalatable. Therefore, rather than trying to tune alerts, teams might make a call to switch them off until someone can get around to figuring out a better way. There’s no use having hypothetical recognition, but no workflow to act on what is generate (other than compliance).

This is where technologies that use ML can help. There are two basic approaches...

One is to avoid alerting until multiple conditions are met that indicate a high probability of threat activity. In this scenario, rather than alerting on the 1st, 2nd, 3rd and 4th ‘suspicious activities’, you wait until you have a critical mass of indicators, and then you generate one high fidelity alert that has a much greater weighting to be malicious. This requires both a high level of precision and accuracy in alerting, and naturally some trade off in the time that can pass before an alert for malicious activity is generated.

The other is to alert on ‘suspicious actives 1-4' and let an analyst or automated process decide if this merits further investigation. This approach sacrifices accuracy for precision, but provides rapid context on whether one, or multiple, conditions are met that push the machine(s) up the priority list in the triage queue. To solve for the lower level of accuracy, this approach can make decisions about how long to sustain alerting. For example, if a host triggers multiple anomaly detection models, rather than continue to send alerts (and risk the SOC deciding to turn them off), the technology can pause alerts after a certain threshold. If a machine has not been quarantined or taken off the network after 10 highly suspicious behaviors are flagged, there is a reasonable assumption that the analyst will have dug into these and found the activity is legitimate.

Punchline 1: the value of Continued Recognition even when 'not malicious'

The topic of paused detections was raised after a recent joint exercise between PBX Group Security and Darktrace in testing Darktrace’s recognition. After a machine being used by the PBX Red Team breached multiple high priority models on Darktrace, the technology stopped alerting on further activity. This was because the initial alerts would have been severe enough to trigger a SOC workflow. This approach is designed to solve the problem of alert overload on a machine that is behaving anomalously but is not in fact malicious. Rather than having the SOC turn off alerts for that machine (which could later be used maliciously), the alerts are paused.

One of the outcomes of the test was that the PBX Detect team advised they would still want those alerts to exist for context to see what else the machine does (i.e. to understand its pattern of life). Now, rather than pausing alerts, Darktrace is surfacing this to customers to show where a rule is being paused and create an option to continue seeing alerts for a machine that has breached multiple models.

Which leads us on to our next point…

Punchline 2: the need for Atomic Tests for detection

Both Darktrace and Photobox Security are big believers in Atomic Red Team testing, which involves ‘unit tests’ that repeatedly (or at a certain frequency) test a detection using code. Unit tests automate the work of Red Teams when they discovery control strengths (which you want to monitor continuously for uptime) or control gaps (which you want to monitor for when they are closed). You could design atomic tests to launch a series of particular attacks / threat actor actions from one machine in a chained event. Or you could launch different discreet actions from different machines, each of which has no prior context for doing bad stuff. This allows you to scale the sample size for testing what recognition you have (either through ML or more traditional SIEM alerting). Doing this also means you don't have to ask Red Teams to repeat the same tests again, allowing them to focus on different threat paths to achieve objectives.

Mitre Att&ck is an invaluable framework for this. Many vendors are now aligning to Att&ck to show what they can recognize relating to attack TTPs (Tools, Tactics and Procedures). This enables security teams to map what TTPs are relevant to them (e.g. by using threat intel about the campaigns of threat actor groups that are targeting them). Atomic Red Team tests can then be used to assure that expected detections are operational or find gaps that need closing.

If you miss detections, then you know you need to optimise the recognition you have. If you get too many recognitions outside of the atomic test conditions, you either have to accept a high false positive rate because of the nature of the network, or you can tune your detection sensitivity. The opportunities to do this with technology based on ML and anomaly detection are significant, because you can quickly see for new attack types what a unit test tells you about your current detections and that coverage you think you have is 'as expected'.

Punchline 3: collaboration for the win

Using well-structured Red Team exercises can help your organisation and your technology partners learn new things about how we can collectively find and halt evil. They can also help defenders learn more about good assumptions to build into ML models, as well as covering edge cases where alerts have 'business intelligence' value vs ‘finding bad’.

If you want to understand the categorisations of ways that your populations of machines act over time, there is no better way to do it than through anomaly detection and feeding alerts into a system that supports SOC operations as well as knowledge management (e.g. a graph database).

Working like this means that we also help get the most out of the visibility and recognition we have. Security solutions can be of huge help to Network and Operations teams for troubleshooting or answering questions about network architecture. Often, it’s just a shift in perspective that unlocks cross-functional value from investments in security tech and process. Understanding that recognition doesn’t stop with security is another great example of where technologies that let you build your own logic into recognition can make a huge difference above protecting the bottom line, to adding top line value.