AI is being embedded in SaaS applications, autonomous agents take actions in real time, and low- or no-code tools let teams build their own AI automations across the enterprise. But while adoption and innovation accelerate, security is still catching up. AI systems behave in ways that traditional defenses were never designed to monitor, and security leaders are increasingly voicing concern.

Securing AI in the Enterprise

AI adoption is outpacing governance, expanding attack surfaces and creating unseen risks. From data and models to AI agents and integrations, security starts with knowing what to protect.

Security professionals are increasingly concerned about the rise of AI

44% are extremely or very concerned about the security implications of third-party LLMs (such as Copilot or ChatGPT)

92% are concerned about the use of AI agents across the workforce and their impact on security

How concerned are you about the security implications of the following AI technologies in your organization’s computing environment?

Number of respondents extremely or very concerned about the security impact of different kinds of AI use


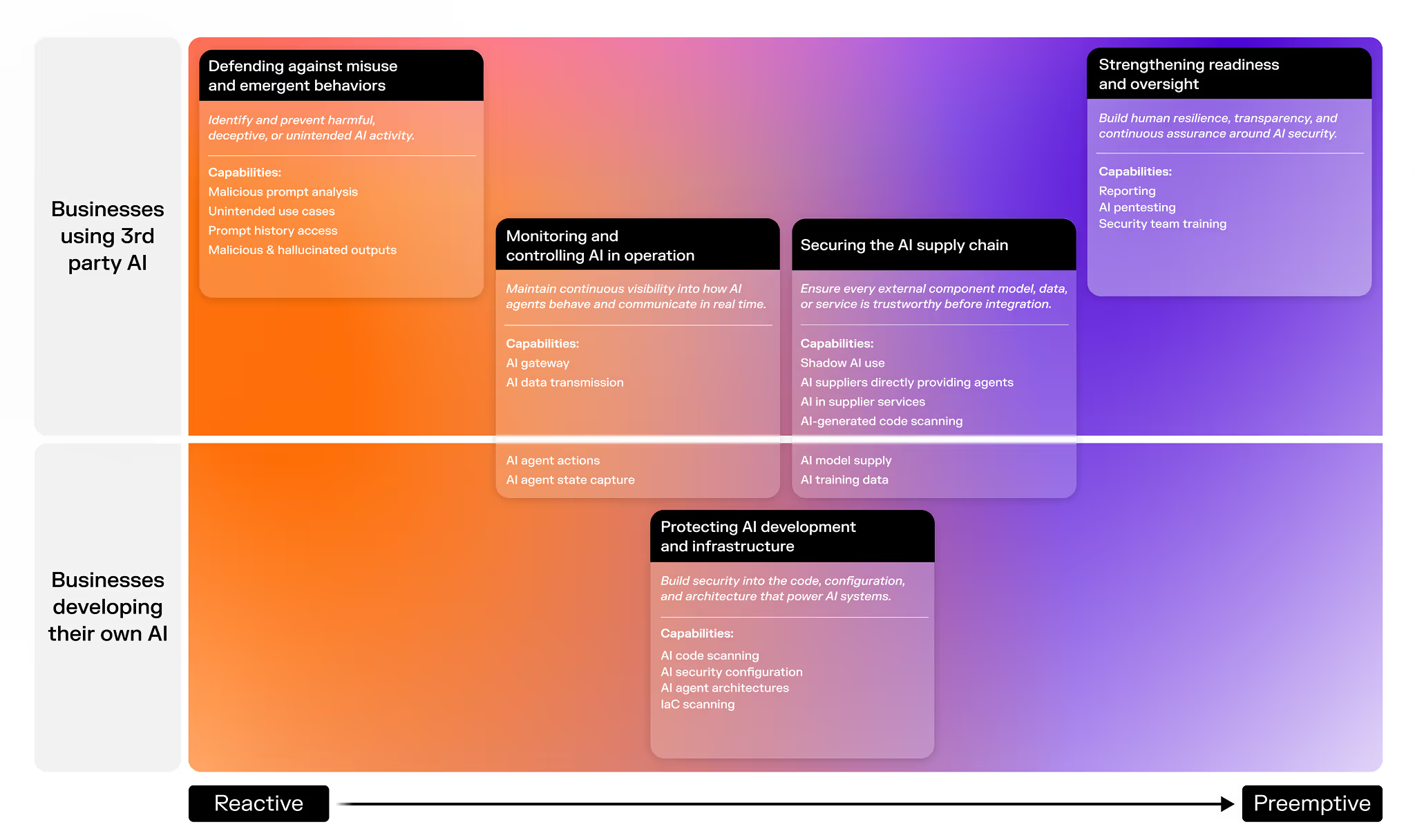

Five categories for securing AI

AI introduces risk through both third-party tools and internally developed systems. This framework shows how those risks span five categories, from preemptive controls in AI development to reactive measures in live operations, helping security leaders visualize where protection is needed across the enterprise.

AI adoption rapidly expands the attack surface

Autonomous and generative AI agents

Systems capable of independent action can operate beyond human guardrails or monitoring.

Embedded AI in SaaS and productivity tools

Employees use AI features that process sensitive data outside direct security visibility.

Cloud and on-prem providers extending services with AI

New embedded functions increase complexity and expand the perimeter of control.

Vendors and acquired software adding AI features

Third-party tools introduce AI capabilities without clear oversight or risk evaluation.

Identifying AI risks is essential for effective governance and security

Every AI system brings its own set of uncertainties, ranging from data leakage and model bias to regulatory exposure. Recognizing these risks is the first step toward building AI governance frameworks that ensure AI operates safely, transparently, and in alignment with business objectives.

Secure AI Adoption without Compromise

As generative AI tools become embedded in enterprise workflows and AI agents gain more access to data and systems, discover how our latest innovations in prompt security, identity and access management, and shadow AI risk management can help you embrace AI with confidence.

Accelerate AI innovation with Darktrace / SECURE AI

AI takes many forms: employee productivity tools, cloud services, vendor solutions, and internally developed systems. Darktrace brings together full visibility, intelligent behavioral oversight, and real‑time control across both human and machine interactions, giving teams the confidence to adopt, experiment with, and scale AI safely.

Get a real‑time picture of how prompts, agents, identities, and data interact so nothing falls through the cracks.

Spot complex, fast‑moving forms of AI misuse that traditional guardrails miss, enabling timely, meaningful intervention.

Catch unusual activity the moment it appears, and ensure AI supports security, compliance, and business goals without adding friction.

Understand what it really means to secure AI in the enterprise

Discover how to identify AI-driven risks, so you can establish AI governance frameworks and controls that secure innovation without exposing the enterprise to new attack surfaces.

Deepen your understanding of AI-driven cybersecurity

AI is reshaping how organizations defend, detect, and govern. These resources offer expert insights, from evaluating AI tools and building responsible governance to understanding your organization’s maturity and readiness. Discover practical frameworks and forward-looking research to help your business innovate securely.

Build trust through transparency and responsible innovation

How do you balance innovation with governance and security? Explore the principles guiding ethical, explainable AI in cybersecurity.

Discover the spectrum of AI types in cybersecurity

Understand the tools behind modern resilience, from supervised machine learning to NLP, and how they work together to stop emerging threats.

Benchmark your AI adoption in cybersecurity against the industry

The AI Maturity Model helps CISOs and security teams assess current capabilities and chart a roadmap toward autonomous, resilient security operations.

See how security leaders feel about the impact of AI

We surveyed 1,500 cybersecurity professionals around the world to uncover trends in AI threats, AI agents, and security tools.

Become an expert in securing AI – join the Readiness Program

AI is moving faster than your security. We’re about to change that.

The Securing AI Readiness Program by Darktrace brings together a cohort of IT and security leaders who share the challenge of securing AI tools at their organizations.

The program is designed to help security and IT professionals anticipate, understand, and prepare for the risks and realities of AI adoption, from security and governance to operational and reputational impact. Participants will be equipped to advise the business clearly, set guardrails for responsible AI use, and guide adoption with confidence at a time when uncertainty is the norm.

Register your interest today


FAQs

Identifying AI security risks involves understanding how AI systems interact with data, users, and connected technologies. Continuous AI monitoring across models, prompts, and integrations helps reveal anomalies or unintended behavior. Combined with AI security posture management, this provides a clear picture of system integrity as AI environments evolve.

AI governance and AI risk management frameworks define how AI is controlled, audited, and aligned with organizational and regulatory expectations. Standards such as the NIST AI Risk Management Framework (RMF) and ISO/IEC 42001 emphasize transparency, accountability, and consistency, ensuring that AI systems are managed responsibly across their lifecycle.

Responsible AI refers to the secure and ethical use of AI technologies in alignment with established governance and regulatory principles. In cybersecurity, it focuses on ensuring transparency, traceability, and trust so that AI-driven decisions and actions can be understood, validated, and safely integrated into enterprise systems.

AI monitoring encompasses the continuous observation of model behavior, data flows, and system outputs to identify unexpected or unauthorized activity. It provides ongoing visibility into how AI operates within its environment, helping maintain confidence in accuracy, security, and compliance over time.

AI security posture management (AI-SPM) is the process of maintaining and evaluating the configuration, policy, and control environment of AI systems. It provides continuous insight into security readiness, allowing teams to detect misconfigurations or emerging risks before they affect broader operations.

AI supply chain security focuses on validating the integrity of external AI components, such as models, datasets, and APIs, that are introduced into an organization. It helps ensure that these components are legitimate, properly licensed, and free from malicious or manipulated content.

Securing AI systems means addressing risks throughout the AI lifecycle, including model creation, training, deployment, and ongoing operation. This involves protecting data, monitoring system behavior, and maintaining governance structures that preserve trust and reliability.

AI model risk describes the potential for models to produce biased, inaccurate, or harmful outcomes due to poor data quality, design flaws, or manipulation. Managing model risk depends on the reliability of training data, the accuracy of performance metrics, and the transparency of model behavior.