Shadow AI

What is shadow AI?

The adoption of artificial intelligence across enterprises is accelerating faster than most governance frameworks can accommodate. Employees are discovering that AI tools can help them write emails, summarize documents, debug code, and complete tasks more efficiently. For security teams, the challenge is knowing where and how AI is being used.

When AI tools operate outside official IT oversight, they create pathways for sensitive data to leave the organization, often without anyone realizing it has happened. Understanding and addressing shadow AI has become essential to maintaining a secure digital environment.

Shadow AI refers to the unsanctioned use of artificial intelligence tools by employees or departments without the knowledge, approval, or oversight of IT and security teams. It covers any tool that has not been vetted through official procurement and security review processes, including:

- Generative AI platforms

- Large language models (LLMs)

- Coding assistants

- AI-enabled productivity applications

According to a 2025 survey, 78% of employees admit to using AI tools that were not approved by their employer. Shadow AI use is typically well-intentioned. Employees want to work faster and solve problems more efficiently. However, when these tools bypass governance controls, they introduce risks that traditional security measures were never designed to address.

Shadow AI shares similarities with traditional shadow IT but presents distinct challenges because of how AI systems process, store, and potentially learn from the data they receive. Unlike a simple file-sharing application, an AI tool may retain information submitted through prompts and use it to improve its models, potentially exposing proprietary data beyond organizational boundaries.

Common shadow AI risks

The shadow AI risks facing enterprises extend across data security, regulatory compliance, and emerging threats from autonomous AI systems. Understanding these risk categories helps security teams prioritize their response strategies.

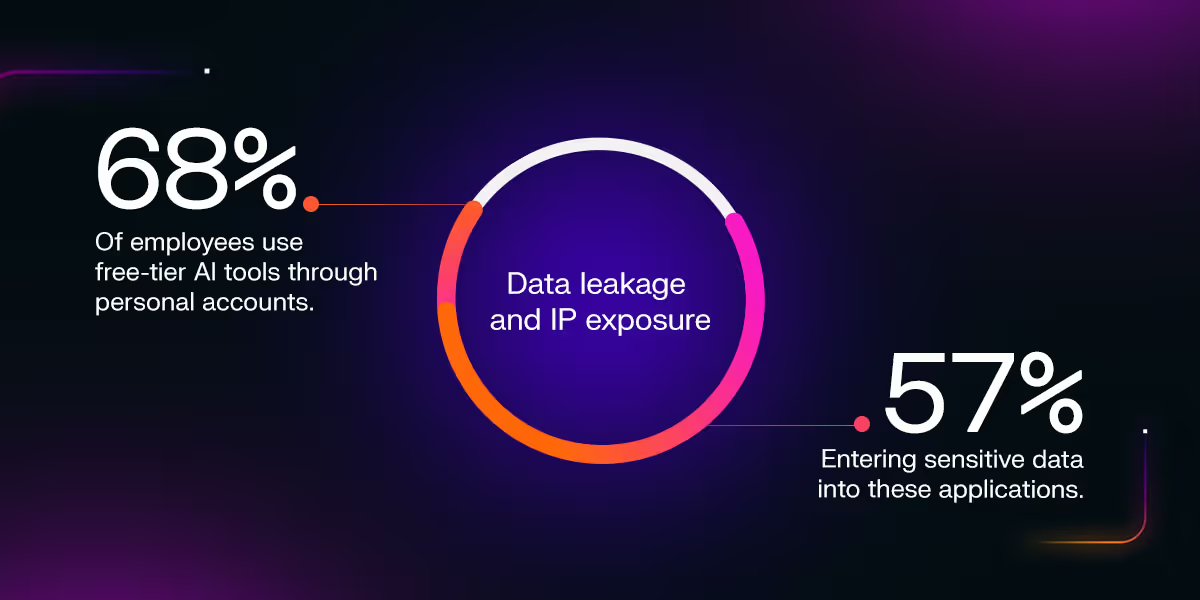

Data leakage and IP exposure

When employees paste customer information, source code, financial data, or strategic documents into unapproved AI tools, that data leaves the organization's control. Many free-tier AI services lack enterprise-grade data protections and may use submitted content for model training.

According to Menlo Security's 2025 report, 68% of employees use free-tier AI tools through personal accounts, with 57% entering sensitive data into these applications. This use creates direct exposure pathways for confidential information, customer records, and proprietary intellectual property. Organizations may unknowingly void IP protections, violate privacy commitments, or breach contractual obligations with partners.

Regulatory noncompliance

Using unvetted AI tools often means bypassing data residency and privacy requirements under regulations such as the General Data Protection Regulation (GDPR), the Health Insurance Portability and Accountability Act (HIPAA), and the California Consumer Privacy Act (CCPA). When data flows through AI services that have not been reviewed for compliance, organizations risk audit failures and potential enforcement actions.

IBM's 2025 Cost of a Data Breach Report found that high levels of shadow AI added roughly $670,000 to the average cost of a data breach, reflecting both the direct costs of incidents and the regulatory penalties that can follow.

Agentic risks and emerging threats

As AI capabilities evolve, so do the risks. Agentic AI refers to autonomous AI systems that take actions rather than simply answering questions. These systems can send emails, modify code, access databases, and execute tasks without a human in the loop.

When employees deploy unapproved agentic AI tools, the potential consequences multiply. An autonomous agent operating without proper guardrails could exfiltrate data, make unauthorized changes to systems, or interact with external services in ways that expose the organization to further compliance risk.
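What "proper guardrails" can mean for an agent is easier to see in code. The Python sketch below assumes a hypothetical agent that proposes each tool call before executing it; the action names, the approval categories, and the email-domain allowlist are illustrative assumptions, not any particular framework's API. It denies unknown actions by default, routes side-effecting actions to a human reviewer, and records every decision in an audit log.

```python
from dataclasses import dataclass, field

# Hypothetical action names; a real agent framework defines its own.
READ_ONLY_ACTIONS = {"search_docs", "read_ticket"}
APPROVAL_REQUIRED_ACTIONS = {"send_email", "modify_code", "query_database"}


@dataclass
class ToolCall:
    action: str  # what the agent wants to do
    target: str  # what it wants to do it to (recipient, repo, table, ...)


@dataclass
class Guardrail:
    """Gate each tool call an agent proposes before it is executed."""

    allowed_email_domains: set[str]
    audit_log: list[str] = field(default_factory=list)

    def decide(self, call: ToolCall) -> str:
        if call.action in READ_ONLY_ACTIONS:
            verdict = "allow"
        elif call.action in APPROVAL_REQUIRED_ACTIONS:
            # Side-effecting actions pause until a human reviewer approves.
            verdict = "needs_approval"
        else:
            # Deny by default: actions nobody anticipated never run.
            verdict = "deny"
        if call.action == "send_email":
            # Never let the agent mail outside the allowlisted domains.
            domain = call.target.rsplit("@", 1)[-1].lower()
            if domain not in self.allowed_email_domains:
                verdict = "deny"
        # Record every decision so reviewers can reconstruct what happened.
        self.audit_log.append(f"{call.action} -> {call.target}: {verdict}")
        return verdict
```

With an allowlist containing only `example.com`, a `send_email` call addressed to `partner@example.com` returns `"needs_approval"`, the same call addressed to `someone@external.net` returns `"deny"`, and an unlisted action such as `delete_repo` is denied outright.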

Common examples of shadow AI use in the enterprise

Shadow AI use is common across every department, often in scenarios that seem harmless on the surface but carry significant risk.

- Development and engineering teams: Developers paste code blocks into chatbots for debugging or optimization, potentially exposing proprietary algorithms.

- Marketing and content: Teams upload customer lists or strategy documents to generate email copy or reports.

- HR and legal: These departments summarize sensitive contracts or candidate evaluations using free public tools.

- Finance: Teams analyze budget projections and confidential financial data through unapproved AI platforms.

In each scenario, well-meaning employees use AI to increase their productivity while unknowingly creating visibility gaps and potential exposure pathways for organizational data.

Why "banning" doesn't work and what to do instead

Outright bans on shadow AI use rarely achieve their intended purpose. Strict prohibitions often drive usage underground or onto personal devices, reducing visibility while doing little to address the underlying demand for productivity tools.

Security teams cannot assess risk, enforce policy, or protect organizational data when they cannot see which tools are in use. When AI use shifts to personal accounts and mobile devices, the organization loses the ability to monitor those data flows. Traditional firewalls and cloud access security brokers (CASBs) frequently miss AI traffic because it resembles standard web activity.
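Because AI traffic usually travels as ordinary HTTPS, one baseline way to regain some visibility is to inventory which known AI services appear in web proxy logs. The Python sketch below assumes a simplified log format of `user host bytes_out` per line; the service hostnames and the sanctioned list are hypothetical placeholders. A static catalog like this only finds services someone has already listed, which is the gap behavioral approaches try to close.

```python
from collections import Counter, defaultdict

# Hypothetical catalog of AI service hostnames the team already knows about.
KNOWN_AI_SERVICES = {
    "chat.ai-vendor-a.example": "Vendor A chat",
    "api.ai-vendor-b.example": "Vendor B API",
    "assist.ai-vendor-c.example": "Vendor C coding assistant",
}
# Hypothetical subset of those services that passed security review.
SANCTIONED = {"api.ai-vendor-b.example"}


def inventory_ai_usage(log_lines):
    """Summarize AI service usage from simplified 'user host bytes_out' lines."""
    usage = defaultdict(Counter)  # host -> Counter mapping user -> bytes sent
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines
        user, host, bytes_out = parts[0], parts[1].lower(), parts[2]
        if host in KNOWN_AI_SERVICES and bytes_out.isdigit():
            usage[host][user] += int(bytes_out)
    report = []
    for host, per_user in sorted(usage.items()):
        report.append({
            "service": KNOWN_AI_SERVICES[host],
            "sanctioned": host in SANCTIONED,
            "users": len(per_user),
            "bytes_out": sum(per_user.values()),
        })
    return report
```

Traffic to hosts outside the catalog (for example an internal site) is silently ignored, which is exactly the blind spot a static list leaves.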

The more effective approach combines governance with enablement. Rather than blocking all AI usage, forward-thinking organizations establish frameworks that permit vetted tools while monitoring risky behavior. This governance-first approach treats shadow AI as a symptom of unmet needs rather than a problem to be suppressed.
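The governance-with-enablement approach can be illustrated as a simple policy check on prompts bound for AI tools. In this Python sketch the sanctioned tool name and the regular expressions are hypothetical and deliberately crude (they will produce false positives and miss plenty); the point is the decision structure: block prompts that appear to contain restricted data, allow clean prompts to vetted tools, and allow but monitor clean prompts to unsanctioned tools instead of banning them outright.

```python
import re

# Hypothetical name of a tool that has passed security review.
SANCTIONED_TOOLS = {"approved-assistant"}

# Deliberately crude, illustrative detectors; real DLP classification is far richer.
SENSITIVE_PATTERNS = {
    "email_address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "source_code": re.compile(r"^\s*(?:def|class|import|function)\b", re.MULTILINE),
}


def evaluate_prompt(tool: str, text: str) -> tuple[str, list[str]]:
    """Return a (decision, findings) pair for a prompt bound for an AI tool."""
    findings = [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)]
    if findings:
        # Restricted data is blocked regardless of which tool it is headed to.
        return "block", findings
    if tool in SANCTIONED_TOOLS:
        return "allow", findings
    # Clean prompt to an unsanctioned tool: permit it, but log it for review.
    return "monitor", findings
```

For example, `evaluate_prompt("random-chatbot", "Draft a friendly product announcement.")` returns `("monitor", [])`: the employee keeps working, and the security team learns that an unvetted tool is in demand.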

How AI-powered cybersecurity solutions boost control

AI-powered cybersecurity solutions address the shadow AI challenge by providing capabilities that traditional security tools lack. Rather than relying on static blocklists or known signatures, these platforms use behavioral analysis to identify anomalous activity across the digital estate.

- Comprehensive visibility: An AI-powered solution such as Darktrace / SECURE AI provides visibility into AI usage patterns, allowing security teams to see which tools employees are accessing, what data is flowing to external services, and where potential policy violations are occurring.

- Behavioral detection: A robust AI-powered solution analyzes how data moves through the organization rather than just where it goes. When unusual patterns emerge, such as large volumes of sensitive data being uploaded to a new AI endpoint, security teams receive alerts that enable rapid response.

- Context-aware control: AI-powered controls let teams enforce nuanced policies that balance security with productivity instead of imposing blanket bans, for example, permitting general AI usage while restricting the upload of source code or customer data.
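The behavioral detection idea above can be approximated, very loosely, with a per-user baseline. This Python sketch is a toy and does not describe how any particular product works: the endpoint names, the 1 MB new-endpoint threshold, the 10x spike factor, and the five-event warm-up are arbitrary assumptions. It raises an alert when a user sends a large upload to an endpoint they have never used before, or when an upload far exceeds that user's historical average.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class UploadEvent:
    user: str
    endpoint: str
    bytes_out: int


class UploadAnomalyDetector:
    """Toy per-user baseline for outbound uploads to AI endpoints."""

    def __init__(self, new_endpoint_threshold: int = 1_000_000, spike_factor: float = 10.0):
        self.new_endpoint_threshold = new_endpoint_threshold  # assumed cutoff in bytes
        self.spike_factor = spike_factor  # assumed multiple of the user's average
        self.seen = defaultdict(set)      # user -> endpoints used before
        self.history = defaultdict(list)  # user -> past upload sizes

    def observe(self, event: UploadEvent) -> list[str]:
        alerts = []
        first_time = event.endpoint not in self.seen[event.user]
        if first_time and event.bytes_out >= self.new_endpoint_threshold:
            alerts.append(
                f"{event.user}: {event.bytes_out} bytes to new endpoint {event.endpoint}"
            )
        past = self.history[event.user]
        # Only compare against a baseline once there is some history to compare to.
        if len(past) >= 5 and event.bytes_out > self.spike_factor * mean(past):
            alerts.append(
                f"{event.user}: {event.bytes_out} bytes is over {self.spike_factor}x baseline"
            )
        # Update the baseline after scoring so an event cannot mask itself.
        self.seen[event.user].add(event.endpoint)
        past.append(event.bytes_out)
        return alerts
```

Scoring before updating the baseline is the key design choice here: a single large exfiltration attempt is judged against past behavior, not against a history that already includes it.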

Learn more about combating shadow AI

Shadow AI reflects a broader reality of innovation outpacing security governance. The goal for cybersecurity professionals is to enable the business to use AI safely while maintaining visibility and control over organizational data.

Addressing this challenge requires understanding where AI is being used, what risks different usage patterns present, and how to implement controls that protect sensitive information without impeding legitimate productivity gains.

Security teams can explore how Darktrace's ActiveAI Security Platform provides the visibility needed to address shadow AI and other emerging threats across the digital estate. For a comprehensive framework on securing AI across the enterprise, including detailed guidance on shadow AI detection, supply chain risks, and governance best practices, see Darktrace's report on Securing AI in the Enterprise.