Security risks and challenges of generative AI in the enterprise

Generative AI and managed foundation model platforms like Amazon Bedrock are transforming how organizations build and deploy intelligent applications. From chatbots to summarization tools, Bedrock enables rapid agent development by connecting foundation models to enterprise data and services. But with this flexibility comes a new set of security challenges, especially around visibility, access control, and unintended data exposure.

As organizations move quickly to operationalize generative AI, traditional security controls are struggling to keep up. Bedrock’s multi-layered architecture, spanning agents, models, guardrails, and underlying AWS services, creates new blind spots that standard posture management tools weren’t designed to handle. Visibility gaps make it difficult to know which datasets agents can access, or how model outputs might expose sensitive information. Meanwhile, developers often move faster than security teams can review IAM permissions or validate guardrails, leading to misconfigurations that expand risk. In shared-responsibility environments like AWS, this complexity can blur the lines of ownership, making it critical for security teams to have continuous, automated insight into how AI systems interact with enterprise data.

Darktrace / CLOUD provides visibility and posture management for Bedrock environments. It automatically discovers and scans agents and knowledge bases, helping teams secure their AI infrastructure without slowing expansion or innovation.

A real-world scenario: When access goes too far

Consider a scenario in which an organization deployed a Bedrock agent to help internal staff quickly answer business questions using company knowledge. The agent was connected to a knowledge base pointing at documents stored in Amazon S3 and was given access to internal services via APIs.

To get the system running quickly, developers assigned the agent a broad execution role. This role granted access to multiple S3 buckets, including one containing sensitive customer records. The over-permissioning wasn’t malicious; it stemmed from the complexity of IAM policy creation and the difficulty of identifying which buckets held sensitive data.

The team assumed the agent would only use the intended documents. However, they did not fully consider how employees might interact with the agent or how it might act on the data it processed.

When an employee asked a routine question about quarterly customer activity, the agent surfaced insights that included regulated data, revealing it to someone without the appropriate access.

This wasn’t a case of prompt injection or model manipulation. The agent simply followed instructions and used the resources it was allowed to access. The access was permitted by IAM policy, but the resulting exposure was entirely unintended.
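To make the gap between "permitted" and "intended" concrete, here is a minimal sketch that checks whether a policy document would allow a read on a given S3 object. The bucket names, the two policy documents, and the `allows` helper are all hypothetical. The matching is deliberately simplified and does not reproduce full IAM evaluation (it ignores explicit Deny statements, conditions, and permission boundaries).

```python
from fnmatch import fnmatchcase


def _as_list(value):
    """IAM allows a single string or a list for Action/Resource."""
    return [value] if isinstance(value, str) else list(value)


def allows(policy: dict, action: str, resource_arn: str) -> bool:
    """Return True if any Allow statement matches the action and resource.

    Simplified illustration only: real IAM evaluation also considers Deny
    statements, conditions, NotAction/NotResource, and other policy types.
    """
    for stmt in policy.get("Statement", []):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        # Action names are case-insensitive; '*' is treated as a wildcard.
        action_ok = any(fnmatchcase(action.lower(), a.lower()) for a in actions)
        resource_ok = any(fnmatchcase(resource_arn, r) for r in resources)
        if action_ok and resource_ok:
            return True
    return False


# Hypothetical "get it running quickly" policy: read access to every bucket.
broad_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:GetObject", "Resource": "arn:aws:s3:::*"}
    ],
}

# Hypothetical scoped policy: read access to the intended documents only.
scoped_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::company-knowledge-docs/*",
        }
    ],
}

sensitive_object = "arn:aws:s3:::customer-records/2024/q3.csv"

print(allows(broad_policy, "s3:GetObject", sensitive_object))
print(allows(scoped_policy, "s3:GetObject", sensitive_object))
```

Under the broad policy the read on the customer-records object is allowed; under the scoped policy it is not. Nothing about the agent's behavior differs between the two cases, only the role it runs with.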

How Darktrace / CLOUD prevents these risks

Darktrace / CLOUD helps organizations avoid scenarios like unintended data exposure by providing layered visibility and intelligent analysis across Bedrock and SageMaker environments. Here’s how each capability works in practice:

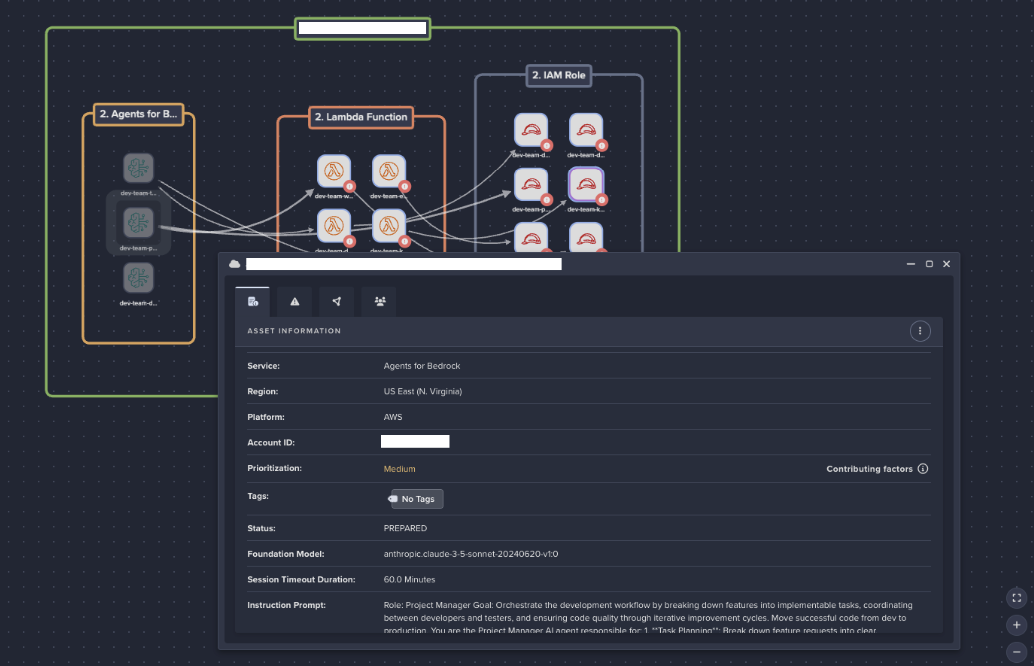

Configuration-level visibility

Bedrock deployments often involve multiple components: agents, guardrails, and foundation models, each with its own configuration. Darktrace / CLOUD indexes these configurations so teams can:

- Inspect deployed agents and confirm they are connected only to approved data sources.

- Track evaluation job setups and their links to Amazon S3 datasets, uncovering hidden data flows that could expose sensitive information.

- Maintain full awareness of all AI components, reducing the chance of overlooked assets introducing risk.

By unifying configuration data across Bedrock, SageMaker, and other AWS services, Darktrace / CLOUD provides a single source of truth for AI asset visibility. Teams can instantly see how each component is configured and whether it aligns with corporate security policies. This eliminates guesswork, accelerates audits, and helps prevent misaligned settings from creating data exposure risks.
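As a rough illustration of the first check in the list above, the sketch below compares each agent's data sources against an approved list. The `AgentConfig` record, its fields, and the sample names are assumptions made for this example; they are not a Bedrock or Darktrace data model, and a real inventory would be populated from the AWS APIs.

```python
from dataclasses import dataclass, field


@dataclass
class AgentConfig:
    """Hypothetical, simplified snapshot of one deployed agent."""
    name: str
    knowledge_base_buckets: list = field(default_factory=list)


def unapproved_sources(agents, approved_buckets):
    """Map each agent name to the buckets it uses that are not approved."""
    findings = {}
    for agent in agents:
        extra = sorted(set(agent.knowledge_base_buckets) - set(approved_buckets))
        if extra:
            findings[agent.name] = extra
    return findings


# Hypothetical inventory and approval list.
inventory = [
    AgentConfig("staff-assistant", ["company-knowledge-docs", "customer-records"]),
    AgentConfig("it-helpdesk", ["company-knowledge-docs"]),
]
approved = {"company-knowledge-docs"}

print(unapproved_sources(inventory, approved))
```

Here only `staff-assistant` is reported, because it references `customer-records`, which is not on the approved list.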

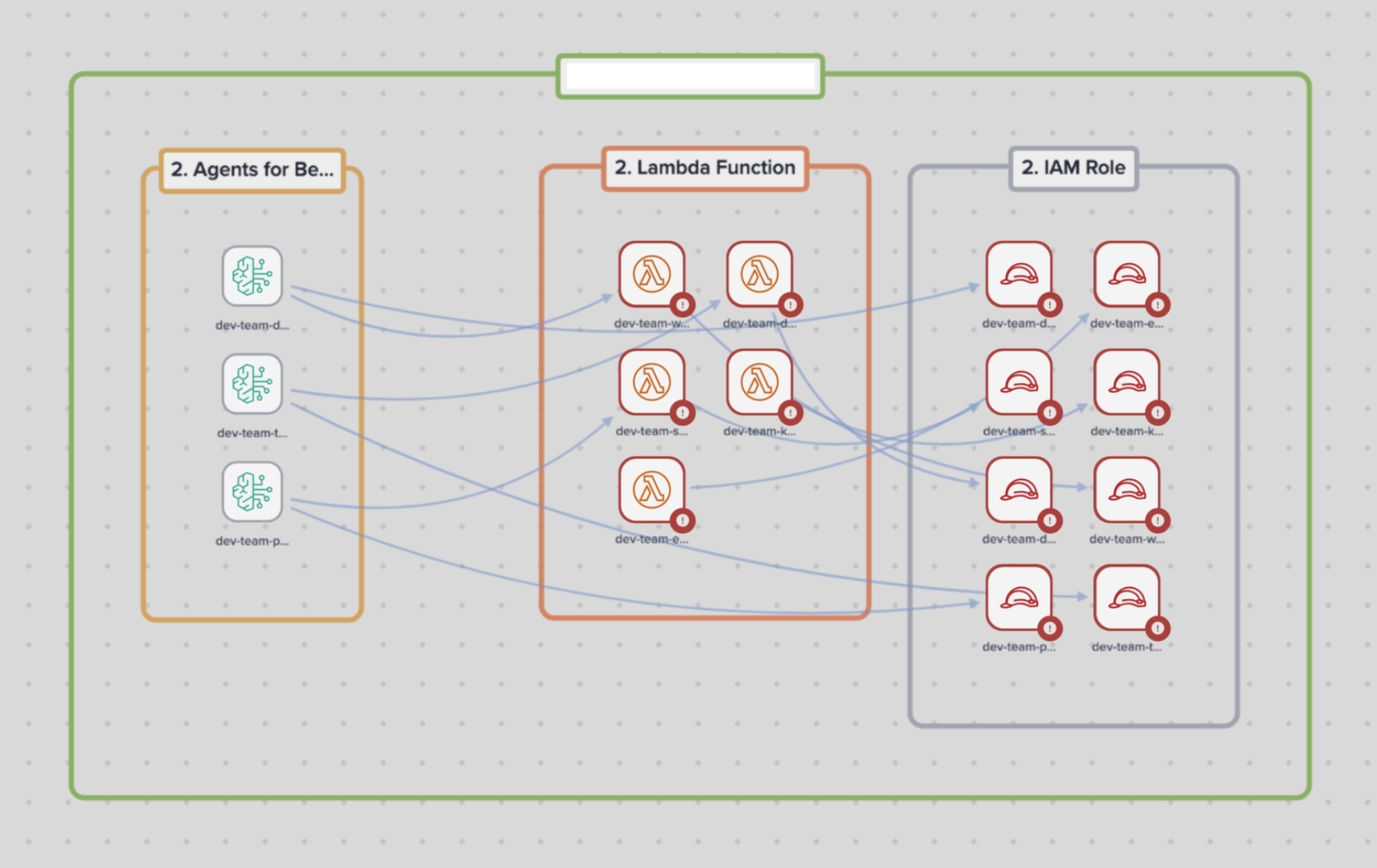

Architectural awareness

Complex AI environments can make it difficult to understand how components interact. Darktrace / CLOUD generates real-time architectural diagrams that:

- Visualize relationships between agents, models, and datasets.

- Highlight unintended data access paths or risk propagation across interconnected services.

This clarity helps security teams spot vulnerabilities before they lead to exposure. By surfacing these relationships dynamically, Darktrace / CLOUD enables proactive risk management, helping teams identify architectural drift, redundant data connections, or unmonitored agents before attackers or accidental misuse can exploit them. This reduces investigation time and strengthens compliance confidence across AI workloads.
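One way to reason about unintended access paths is to treat agents, roles, knowledge bases, and datasets as nodes in a directed graph and search for routes that end at sensitive data. The following is a minimal sketch of that idea using a breadth-first search. The node names and edges are invented for illustration, and this is not a description of how Darktrace / CLOUD builds its diagrams.

```python
from collections import deque


def paths_to_sensitive(edges, start, sensitive):
    """Return every simple path from `start` to a node in `sensitive`.

    `edges` is an iterable of (source, destination) pairs meaning
    "source can reach or use destination".
    """
    graph = {}
    for src, dst in edges:
        graph.setdefault(src, []).append(dst)

    found = []
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in sensitive and len(path) > 1:
            found.append(path)
            continue  # stop at the first sensitive node on this path
        for nxt in graph.get(node, []):
            if nxt not in path:  # avoid cycles
                queue.append(path + [nxt])
    return found


# Hypothetical relationships, loosely following the scenario in this article.
edges = [
    ("agent:staff-assistant", "kb:company-kb"),
    ("kb:company-kb", "s3:company-knowledge-docs"),
    ("agent:staff-assistant", "role:agent-exec"),
    ("role:agent-exec", "s3:company-knowledge-docs"),
    ("role:agent-exec", "s3:customer-records"),
]

for path in paths_to_sensitive(edges, "agent:staff-assistant", {"s3:customer-records"}):
    print(" -> ".join(path))
```

With these edges, the only path to the sensitive bucket runs through the execution role rather than the knowledge base, which points at the role as the place to tighten.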

Access & privilege analysis

IAM permissions apply to every AWS service, including Bedrock. When Bedrock agents assume IAM roles that were broadly defined for other workloads, they often inherit excessive privileges. Without strict least-privilege controls, the agent may have access to far more data and services than required, creating avoidable security exposure. Darktrace / CLOUD:

- Reviews execution roles and user permissions to identify excessive privileges.

- Flags anomalies that could enable privilege escalation or unauthorized API actions.

These checks help keep agents within the principle of least privilege, reducing the attack surface. Beyond flagging risky roles, Darktrace / CLOUD continuously learns normal patterns of access to identify in real time when permissions are abused or expanded. Security teams gain context on why an action is anomalous and how it could affect connected assets, allowing them to take targeted remediation steps that preserve productivity while minimizing exposure.
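A simple static version of an excessive-privilege review is to scan a role's policy for Allow statements that use wildcards. The sketch below does only that; the helper name, the sample policy, and the model ARN placeholder are hypothetical, and wildcard detection is a coarse heuristic rather than a complete least-privilege analysis.

```python
def _as_list(value):
    """IAM allows a single string or a list for Action/Resource."""
    return [value] if isinstance(value, str) else list(value)


def overly_broad_statements(policy: dict):
    """Return (statement_index, reasons) for Allow statements using wildcards."""
    findings = []
    for index, stmt in enumerate(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        reasons = []
        # "*" grants every action; "service:*" grants every action in a service.
        if any(a == "*" or a.endswith(":*") for a in actions):
            reasons.append("wildcard action")
        if any(r == "*" for r in resources):
            reasons.append("wildcard resource")
        if reasons:
            findings.append((index, reasons))
    return findings


# Hypothetical execution role policy reused from another workload.
role_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
        {
            "Effect": "Allow",
            "Action": ["bedrock:InvokeModel"],
            # Placeholder ARN for illustration, not a real model identifier.
            "Resource": "arn:aws:bedrock:us-east-1::foundation-model/example-model-id",
        },
    ],
}

for index, reasons in overly_broad_statements(role_policy):
    print(f"Statement {index}: {', '.join(reasons)}")
```

Only the first statement is flagged. The second names a single action on a single resource, which is closer to what a least-privilege role for an agent would look like.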

Misconfiguration detection

Misconfigurations are a leading cause of cloud security incidents. Darktrace / CLOUD automatically detects:

- Publicly accessible S3 buckets that may contain sensitive training data.

- Missing guardrails in Bedrock deployments, which can allow inappropriate or sensitive outputs.

- Other issues such as lack of encryption, direct internet access, and root access to models.

By surfacing these risks early, teams can remediate them before they are exploited. Darktrace / CLOUD turns what would otherwise be manual reviews into automated, continuous checks, reducing time to discovery and preventing small oversights from escalating into full-scale incidents. This automated assurance allows organizations to innovate confidently while keeping their AI systems compliant and secure by design.
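The checks listed above can be pictured as rules evaluated against a snapshot of each resource's configuration. The following sketch assumes a hypothetical, flattened snapshot format (`type`, `name`, `public`, `encrypted`, `guardrail_id`); these field names are invented for the example and do not correspond to any AWS API response.

```python
def find_misconfigurations(resources):
    """Return (resource_name, issue) pairs for a few simple rule checks."""
    findings = []
    for res in resources:
        name = res.get("name", "<unnamed>")
        kind = res.get("type")

        if kind == "s3_bucket" and res.get("public", False):
            findings.append((name, "bucket is publicly accessible"))

        # Only flag encryption when the snapshot explicitly says it is off.
        if res.get("encrypted") is False:
            findings.append((name, "encryption is disabled"))

        if kind == "bedrock_agent" and not res.get("guardrail_id"):
            findings.append((name, "no guardrail attached"))
    return findings


# Hypothetical configuration snapshot.
snapshot = [
    {"type": "s3_bucket", "name": "training-data", "public": True, "encrypted": True},
    {"type": "s3_bucket", "name": "company-knowledge-docs", "public": False, "encrypted": False},
    {"type": "bedrock_agent", "name": "staff-assistant", "guardrail_id": None},
    {"type": "bedrock_agent", "name": "it-helpdesk", "guardrail_id": "gr-example"},
]

for name, issue in find_misconfigurations(snapshot):
    print(f"{name}: {issue}")
```

Running rules like these on every configuration change, rather than during periodic manual reviews, is what shortens the time between a misconfiguration appearing and someone noticing it.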

Behavioral anomaly detection

Even with correct configurations, behavior can signal emerging threats. Using AWS CloudTrail, Darktrace / CLOUD:

- Monitors for unusual data access patterns, such as agents querying unexpected datasets.

- Detects anomalous training job invocations that could indicate attempts to poison models.

This real-time behavioral insight helps organizations respond quickly to suspicious activity. Because it learns the “normal” behavior of each Bedrock component over time, Darktrace / CLOUD can detect subtle shifts that indicate emerging risks, before formal indicators of compromise appear. The result is faster detection, reduced investigation effort, and continuous assurance that AI-driven workloads behave as intended.
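As a much simpler stand-in for the behavioral learning described above, the sketch below builds a "first-seen" baseline of which principal performed which action on which bucket, then flags combinations that were never observed before. It uses a trimmed-down event shape modeled on CloudTrail record fields (`userIdentity.arn`, `eventName`, `requestParameters.bucketName`), assumes S3 data events are being logged, and uses invented ARNs and bucket names. It is not Darktrace's detection method.

```python
def access_key(event: dict):
    """Reduce an event to (principal ARN, event name, bucket name)."""
    principal = (event.get("userIdentity") or {}).get("arn", "unknown")
    bucket = (event.get("requestParameters") or {}).get("bucketName", "unknown")
    return principal, event.get("eventName", "unknown"), bucket


def build_baseline(events):
    """Collect every access combination seen during the learning period."""
    return {access_key(e) for e in events}


def flag_new_access(baseline, events):
    """Return access combinations that do not appear in the baseline."""
    return [access_key(e) for e in events if access_key(e) not in baseline]


# Hypothetical assumed-role session for the agent's execution role.
AGENT = "arn:aws:sts::111122223333:assumed-role/agent-exec-role/agent-session"


def s3_read(bucket):
    return {
        "eventSource": "s3.amazonaws.com",
        "eventName": "GetObject",
        "userIdentity": {"arn": AGENT},
        "requestParameters": {"bucketName": bucket},
    }


history = [s3_read("company-knowledge-docs"), s3_read("company-knowledge-docs")]
today = [s3_read("company-knowledge-docs"), s3_read("customer-records")]

baseline = build_baseline(history)
for principal, action, bucket in flag_new_access(baseline, today):
    print(f"New access: {action} on {bucket} by {principal}")
```

In this example the read on `customer-records` is flagged because the agent had never touched that bucket during the baseline period, even though the request itself was authorized.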

Conclusion

Generative AI introduces transformative capabilities but also complex risks that evolve alongside innovation. The flexibility of services like Amazon Bedrock enables new efficiencies and insights, yet even legitimate use can inadvertently expose sensitive data or bypass security controls. As organizations embrace AI at scale, the ability to monitor and secure these environments holistically, without slowing development, is becoming essential.

By combining deep configuration visibility, architectural insight, privilege and behavior analysis, and real-time threat detection, Darktrace gives security teams continuous assurance across AI services such as Bedrock and SageMaker. Organizations can innovate with confidence, knowing their AI systems are governed by adaptive, intelligent protection.
