In an escalating threat landscape with email as the primary target, IT teams need to move far beyond traditional methods of email security that haven’t evolved fast enough. These tools are trained on historical attack data, so they only catch what they’ve seen before. By design, they are permanently playing catch-up with continually innovating attackers, taking an average of 13 days to recognize new attacks[1].

Phishing attacks are getting more targeted and sophisticated as attackers innovate in two key areas: delivery tactics, and social engineering. On the malware delivery side, attackers are increasingly ‘piggybacking’ off the legitimate infrastructure and reputations of services like SharePoint and OneDrive, as well as legitimate email accounts, to evade security tools.

To evade the human on the other end of the email, attackers are tapping into new social engineering tactics. They still exploit fear, uncertainty, and doubt (FUD) and evoke a sense of urgency, but they now have tools at their disposal that enable tailored and personalized social engineering at scale.

With the help of tools such as ChatGPT, threat actors can leverage AI technologies to impersonate trusted organizations and contacts, enabling damaging business email compromise, realistic spear phishing, spoofing, and social engineering. In fact, Darktrace found that the average linguistic complexity of phishing emails has jumped by 17% since the release of ChatGPT.

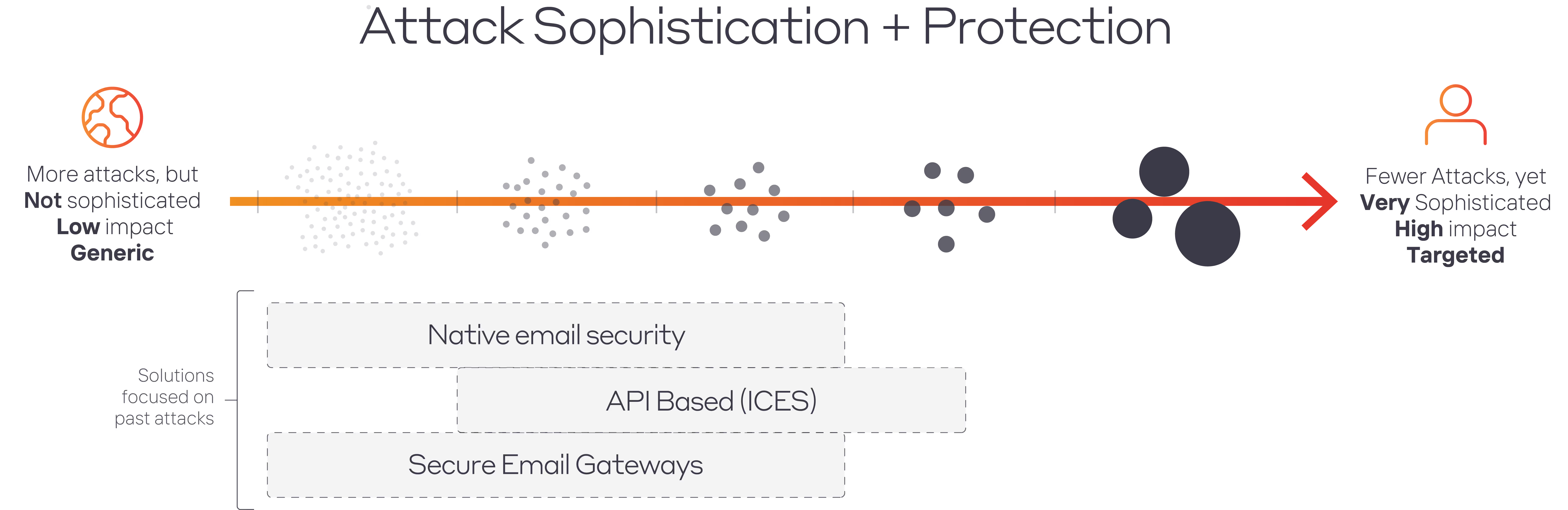

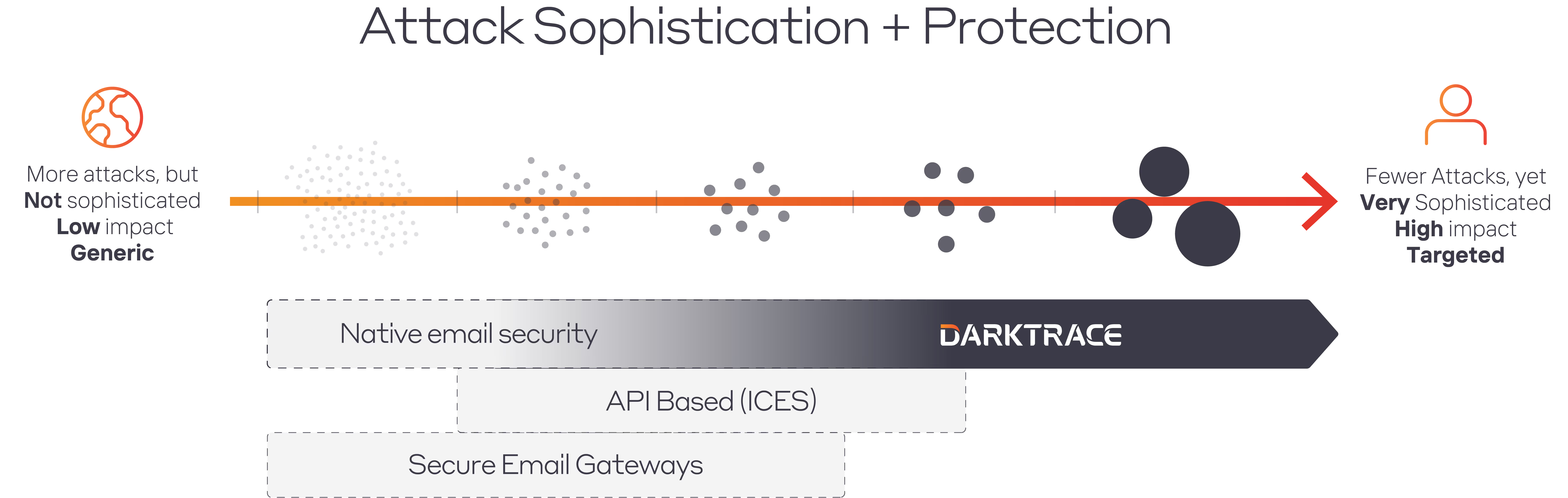

This is just one example of accelerating attack sophistication, which lowers the barrier to entry and improves outcomes for attackers. It forms part of a wider trend of the attack landscape moving from low-sophistication, low-impact, and generic phishing tactics – a ‘spray and pray’ approach – to more targeted, sophisticated, and higher-impact attacks that fall outside the typical detection remit of any tool relying on rules and signatures. Generative AI and other technologies in the attackers’ toolkit will soon enable the launch of these attacks at scale, and only being able to catch known threats that have been seen before will no longer be enough.

The vast majority of email security tools look to the past to try and predict the next attack, and so are designed to catch today’s attacks tomorrow.

Organizations are increasingly moving towards AI systems, but not all AI is the same, and how that AI is applied is crucial. IT and security teams need to move towards email security that is context-aware and leverages AI for deep behavioral analysis. It’s a proven approach, successfully catching attacks that slip by other tools across thousands of organizations. Email security today also needs to be about more than just protecting the inbox. It needs to address not just malicious emails, but the full 360-degree view of a user across their email messages and accounts, as well as extended coverage where email bleeds into collaboration tools and SaaS. For many organizations, the question is not if they should upgrade their email security, but when – how much longer can they risk relying on email security that’s stuck looking to the past?

The Email Security Industry: Playing Catch-Up

Gateways and ICES (Integrated Cloud Email Security) providers have something in common: they look to past attacks in order to try to predict the future. They often rely on previous threat intelligence and on assembling ‘deny-lists’ of known bad elements of emails already identified as malicious. As a result, these tools fail to meet the reality of the contemporary threat landscape. Some of them attempt to use AI to improve this flawed approach, looking not only for direct matches, but using "data augmentation" to try and find similar-looking emails. But this approach is still inherently blind to novel threats.
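
To make the limitation concrete, here is a minimal sketch of deny-list matching. It is an illustration only, not any vendor’s implementation: the `Email` type, the `DENY_LIST` entries, and the example domains are all hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Email:
    # Hypothetical, simplified view of an email: only the domains it involves.
    sender_domain: str
    link_domains: Tuple[str, ...]


# Hypothetical deny-list assembled from attacks that were already identified.
DENY_LIST = {"known-bad.example", "old-phish.example"}


def deny_list_verdict(email: Email) -> str:
    """Block only when a domain in the email is already on the deny-list."""
    domains = {email.sender_domain, *email.link_domains}
    return "block" if domains & DENY_LIST else "deliver"


# An attack that reuses known infrastructure is caught...
seen_before = Email("known-bad.example", ("old-phish.example",))
# ...but one sent from a fresh domain and linking to a legitimate service is not.
novel = Email("fresh-domain.example", ("sharepoint.com",))

print(deny_list_verdict(seen_before))
print(deny_list_verdict(novel))
```

Because the verdict depends entirely on prior sightings, the second email is delivered no matter how malicious its content is.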

These tools tend to be resource-intensive, requiring constant policy maintenance combined with the hand-to-hand combat of releasing held-but-legitimate emails and holding back malicious phishing emails. This burden of manually releasing individual emails typically falls on security teams, teams that are frequently small with multiple areas of responsibility. The solution is to deploy technology that autonomously stops the bad while allowing the good through, and adapts to changes in the organization – technology that actually fits the definition of ‘set and forget’.

Becoming behavioral and context-aware

There is a seismic shift underway in the industry, from “secure” email gateways to intelligent AI-driven thinking. The right approach is to understand the behaviors of end users – how each person uses their inbox and what constitutes ‘normal’ for each user – in order to detect what’s not normal. This approach makes use of context – how and when people communicate, and with whom – to spot the unusual and to flag to the user when something doesn’t look quite right, and why. Basically, a system that understands you. Not past attacks.
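
As a rough illustration of the idea, the sketch below learns a toy per-user baseline and explains why a new email looks unusual. It is not Darktrace’s method: the `UserBaseline` class, its two signals (known senders and usual hours), and the example addresses are assumptions chosen for brevity, and a real system would draw on far richer context.

```python
class UserBaseline:
    """Toy per-user model of 'normal' email behavior (illustrative only)."""

    def __init__(self):
        self.known_senders = set()  # who this user normally hears from
        self.active_hours = set()   # hours of the day when mail normally arrives

    def observe(self, sender, hour):
        """Learn from one email the user received during normal activity."""
        self.known_senders.add(sender)
        self.active_hours.add(hour)

    def explain_unusual(self, sender, hour):
        """Return human-readable reasons the email deviates from the baseline."""
        reasons = []
        if sender not in self.known_senders:
            reasons.append(f"first email from {sender}")
        if hour not in self.active_hours:
            reasons.append(f"no earlier mail received at {hour:02d}:00")
        return reasons


baseline = UserBaseline()
for sender, hour in [("alice@corp.example", 9), ("bob@corp.example", 14)]:
    baseline.observe(sender, hour)

routine = baseline.explain_unusual("alice@corp.example", 9)
suspicious = baseline.explain_unusual("ceo@c0rp.example", 3)
print(routine)
print(suspicious)
```

Nothing here refers to previously seen attacks: the lookalike sender is flagged purely because it deviates from what is normal for this user, and the returned reasons are what could be surfaced to the user as the ‘why’.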

Darktrace has developed a fundamentally different approach to AI, one that doesn’t learn what’s dangerous from historical data but from a deep, continuous understanding of each organization and its users. Only a complex understanding of the normal day-to-day behavior of each employee can accurately determine whether or not an email actually belongs in that recipient’s inbox.

Whether it’s phishing, ransomware, invoice fraud, executive impersonation, or a novel technique, leveraging AI for behavioral analysis allows for faster decision-making – it doesn’t need to wait for a Patient Zero to contain a new attack because it can stop malicious threats on first encounter. This increased confidence in detection allows for a more precise response – targeted action to remove only the riskiest parts of an email, rather than taking a broad blanket response out of caution – in order to reduce risk with minimal disruption to the business.

Returning to our attack spectrum, as the attack landscape moves increasingly towards highly sophisticated attacks that use novel or seemingly legitimate infrastructure to deliver malware and deceive victims, it has never been more important to detect these high-impact, targeted attacks and issue an appropriate response.

Understanding you and a 360° view of the end user

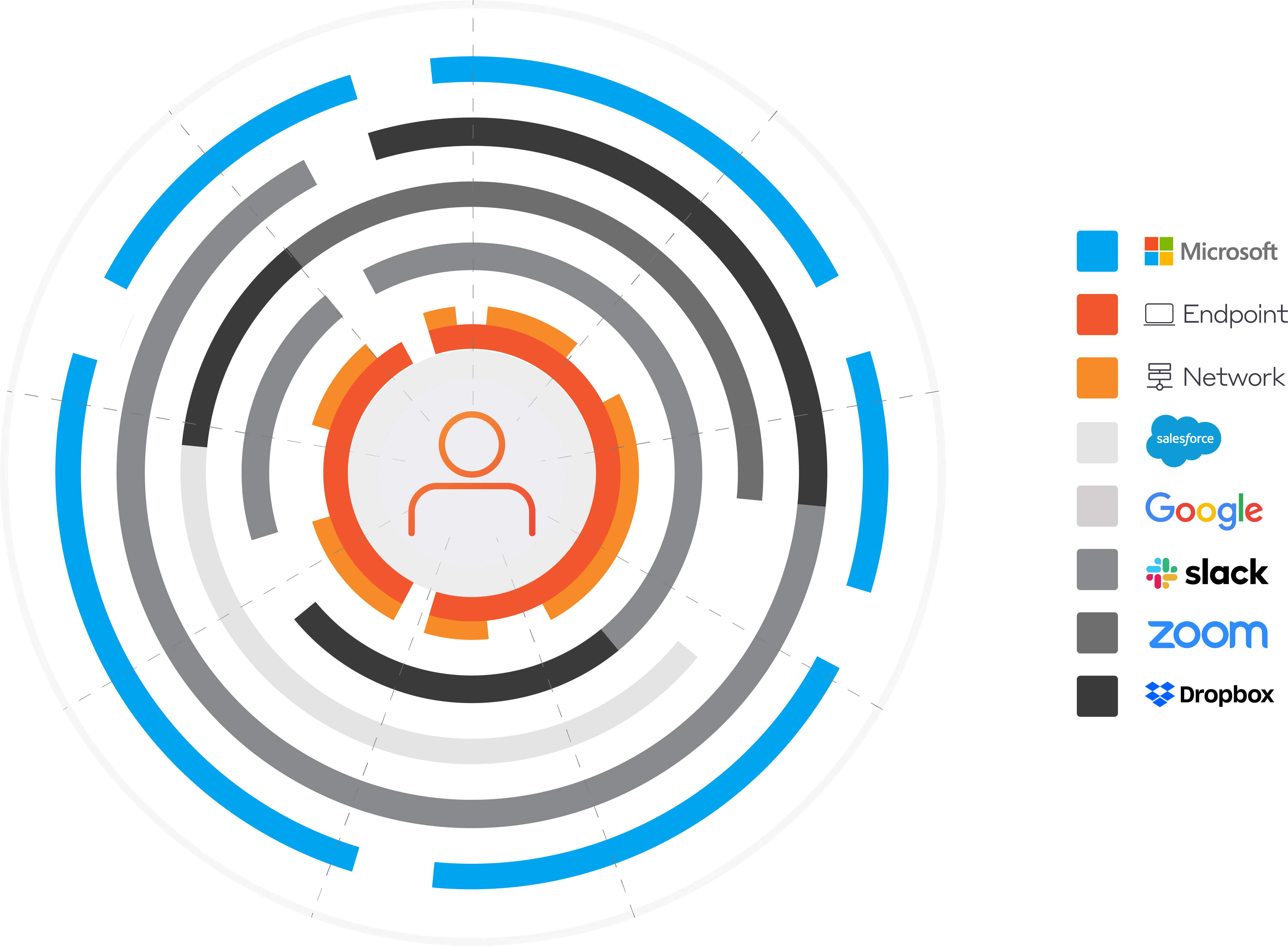

We know that modern email security isn’t limited to the inbox alone – it has to encompass a full understanding of a user’s normal behavior across email and beyond. Traditional email tools are focused solely on inbound email as the point of breach, which fails to protect against the potentially catastrophic damage caused by a successful email attack once an account has been compromised.

In order to have complete context around what is normal for a user, it’s crucial to understand their activity within Microsoft 365, Google Workspace, Salesforce, Dropbox, and even their device on the network. Monitoring devices (as well as inboxes) for symptoms of infection is crucial to determining whether or not an email was malicious, and whether similar emails need to be withheld in the future. Combining this with data from cloud apps enables a more holistic view of identity-based attacks.

Understanding a user in the context of the whole organization – which also means network, cloud, and endpoint data – brings additional context to light to improve decision making, and connecting email security with external data on the attack surface can help proactively find malicious domains, so that defenses can be hardened before an attack is even launched.

Educating and Engaging Your Employees

Ultimately, it’s employees who interact with any given email. If organizations can successfully empower this user base, they will end up with a smarter workforce, fewer successful attacks, and a security team with more time on their hands for better, strategic work.

The tools that succeed best will be those that can leverage AI to help employees become more security-conscious. While some emails are evidently malicious and should never enter an employee’s inbox, there is a significant grey area of emails that have potentially risky elements. The majority of security tools will either withhold these emails completely – even though they might be business critical – or let them through scot-free. But what if these grey-area emails could in fact be used as training opportunities?

Unlike phishing simulations, behavioral AI can improve security awareness holistically throughout organizations by training users with a light touch via their own inboxes – bringing the end user into the loop to harden defenses.

The new frontier of email security fights AI with AI, and organizations that lag behind might end up learning the hard way. Read on for our blog series about how these technologies can transform the employee experience, dynamize deployment, augment security teams, and form part of an integrated defensive loop.

[1] 13 days is the mean time that phishing payloads were active in the wild between the response of Darktrace/Email and the earliest of 16 independent feeds submitted by other email security technologies.
